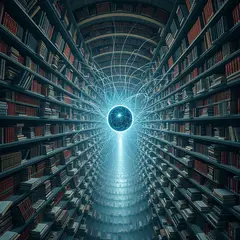

Extending the Limits: Alibaba’s Qwen2.5-Turbo and the 1M Token Milestone

Alibaba Cloud’s Qwen team has released Qwen2.5-Turbo, an update to its language model that extends the context length to 1 million tokens. That is enough to process roughly 10 full-length novels or 30,000 lines of code in a single prompt. The model performs strongly on long-text comprehension, scoring higher than GPT-4 on the RULER long-context benchmark. Using sparse attention mechanisms, Qwen2.5-Turbo also achieves a 4.3x speedup when processing long inputs while remaining cost-effective. The team acknowledges that performance on long-sequence tasks is still a challenge and plans further optimizations. The release is a significant step in AI’s ability to handle and understand extensive context.
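To give a rough sense of why sparse attention matters at this scale, the sketch below counts how many query-key scores a model computes with full causal attention versus a simple sliding-window pattern. This is only an illustration: the announcement does not say which sparse pattern Qwen2.5-Turbo uses, and the window size of 4,096 and both function names are hypothetical.

```python
def dense_causal_pairs(n: int) -> int:
    """Number of (query, key) pairs scored by full causal attention."""
    return n * (n + 1) // 2


def windowed_causal_pairs(n: int, window: int) -> int:
    """Pairs scored when each query attends only to its last `window` tokens,
    itself included. A stand-in pattern, not the model's actual mechanism."""
    if window >= n:
        return dense_causal_pairs(n)
    # The first `window` queries see every earlier token;
    # each remaining query sees exactly `window` tokens.
    return window * (window + 1) // 2 + (n - window) * window


if __name__ == "__main__":
    n = 1_000_000   # context length from the announcement
    window = 4_096  # hypothetical window size, chosen only for illustration
    dense = dense_causal_pairs(n)
    sparse = windowed_causal_pairs(n, window)
    print(f"dense:  {dense:,} pairs")
    print(f"sparse: {sparse:,} pairs")
    print(f"ratio:  {dense / sparse:.1f}x fewer score computations")
```

The ratio printed here is not the 4.3x figure from the announcement. That number is a measured speedup for the whole model, while this count covers attention scores only and assumes a pattern chosen for simplicity.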